Build a Python RAG App from Scratch: A Hands-On Guide

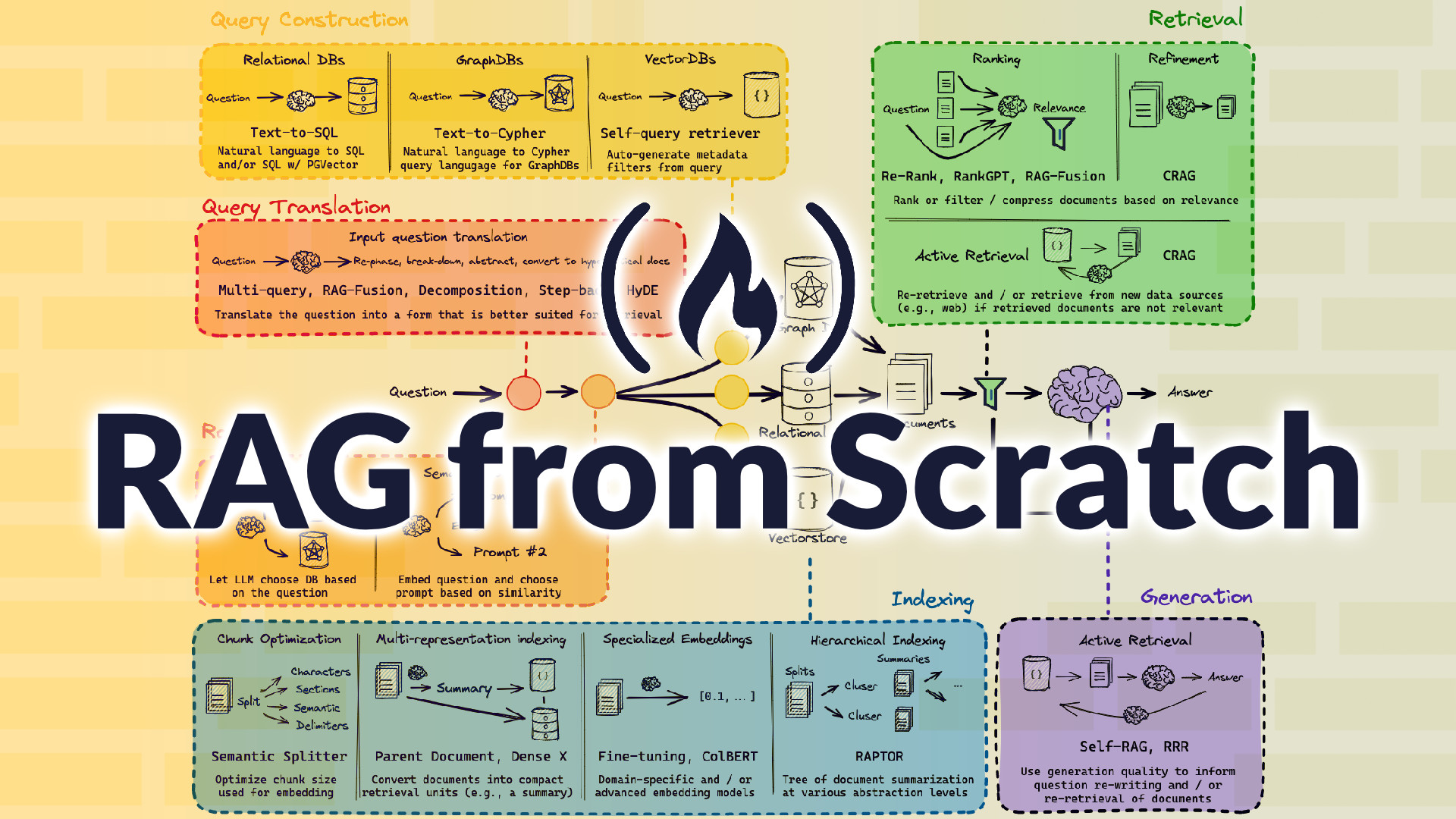

This guide walks you through building a Retrieval-Augmented Generation (RAG) application from scratch using Python. You’ll go from understanding the theory to deploying a functional text-based Q&A system using real documents and LangChain.

Retrieval-Augmented Generation (RAG) combines vector search with LLMs to ground answers in factual documents. Building one yourself is the best way to truly understand it.

🧠 Why Build It?

- You’ll understand how embeddings, retrieval, and generation work together

- Learn to integrate LangChain with vector stores like FAISS or Pinecone

- Move from passive learning to active, production-style workflows

📚 Recommended Resources LangChain Tutorial – Build a Retrieval-Augmented Generation (RAG) App: Part 1 A full code walkthrough of setting up a RAG pipeline using LangChain and FAISS. https://python.langchain.com/docs/tutorials/rag/?utm_source=chatgpt.com

SingleStore – RAG Tutorial: A Beginner's Guide Helps ground theory in a practical use case (e.g., Q&A over documents). https://www.singlestore.com/blog/a-guide-to-retrieval-augmented-generation-rag/?utm_source=chatgpt.com

🛠️ Step-by-Step Project

- Ingest Content : Load text files or PDFs and split them into chunks using LangChain’s TextSplitter.

- Embed Chunks : Use OpenAI, Hugging Face, or SentenceTransformers to create embeddings.

- Store in Vector DB : Use FAISS or Qdrant to store and index the embeddings.

- Retrieve with User Query : Accept a user query, embed it, and retrieve similar chunks.

- Feed into LLM : Combine query and retrieved context into a prompt for an LLM.

- Return Answer : Stream or display the response to the user.

🧪 Reflect Are your answers grounded in the context retrieved? Can you trace citations to specific source docs? Do you know which part of the pipeline (retrieval or generation) needs tuning?